What is Cisco ACI fabric forwarding? The Cisco Application Centric Infrastructure (ACI) allows applications to define the network infrastructure. It is one of the most important architectures in Software-Defined Networking (SDN). The ACI architecture simplifies, optimizes, and accelerates the entire application deployment life cycle. Its network services include routing and switching, QoS, load balancing, and security. In this session, I will explain how Cisco ACI fabric forwarding works.

Overview of ACI Fabric

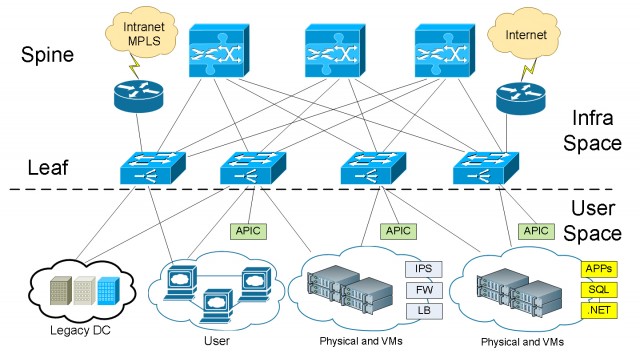

Let’s first understand the basic concepts. Cisco ACI uses a “Spine” and “Leaf” design, also known as a Clos architecture, to deliver network traffic.

The Cisco Application Policy Infrastructure Controller (APIC) API enables applications to directly connect with a secure, shared, high-performance resource pool that includes network, compute, and storage capabilities.

Advantages of Spine and Leaf Architecture

The ACI fabric “Spine and Leaf” architecture scales linearly in both performance and cost. When you need more server or device connectivity, you simply add a Leaf. You can add Leaves up to the capacity of your Spines. When you need more redundancy or more paths for bandwidth within the fabric, you simply add more Spines.

Basic ACI Fabric Wiring Layout

We typically connect every Leaf to every Spine. A Leaf never connects to another Leaf, just as a Spine never connects to another Spine. Everything else in your network connects to one Leaf, or to several Leaves for redundancy and HA.
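The wiring rule can be sketched in a few lines of Python. This is only an illustrative model, not an ACI tool: the function names `build_fabric` and `paths_between` and the device names are hypothetical. It shows that connecting every Leaf to every Spine gives any pair of Leaves one two-hop path per Spine.

```python
from itertools import product

def build_fabric(num_spines, num_leaves):
    """Return (spines, leaves, links) for a fabric where every leaf connects to every spine."""
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    # Links exist only between a leaf and a spine, never leaf-leaf or spine-spine.
    links = set(product(leaves, spines))
    return spines, leaves, links

def paths_between(leaf_a, leaf_b, spines, links):
    """List the two-hop leaf -> spine -> leaf paths between two leaves."""
    return [(leaf_a, s, leaf_b) for s in spines
            if (leaf_a, s) in links and (leaf_b, s) in links]

spines, leaves, links = build_fabric(num_spines=2, num_leaves=4)
print(len(links))                                      # 4 leaves x 2 spines = 8 links
print(paths_between("leaf1", "leaf3", spines, links))  # one path per spine
```

Adding a third Spine in this model would add one more path between every pair of Leaves without touching any existing link.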

Within the ACI architecture, we look at traffic in two different spaces: the infrastructure space and the user space. The user space can consist of a single organization, or scale up to 64,000 tenants or customers from a service provider’s perspective.

Virtual or physical devices such as VM hosts, firewalls, and IPS/IDS appliances all connect to the Leaves. We can also connect external networks, so ACI can work with your existing infrastructure: whatever networks you already have can connect to the ACI fabric. For example, we connect the Internet and Intranet CE routers to the Leaf layer of the ACI fabric.

What is VxLAN

Inside the ACI infrastructure, we utilize VxLAN, or Virtual Extensible LAN.

In a traditional network, VLANs provide logical segmentation of Layer 2 boundaries or broadcast domains. However, VLANs use the available network links inefficiently, impose rigid requirements on device placement in the data center network, and scale to a maximum of only 4094 VLANs. As a result, they have become a limiting factor for large enterprise networks and cloud service providers building large multitenant data centers.

The Virtual Extensible LAN (VxLAN) offers a solution to the data center network challenges posed by traditional VLAN technology. The VxLAN standard provides the elastic workload placement and higher scalability of Layer 2 segmentation that today’s application demands require. Compared to traditional VLAN, VxLAN offers the following benefits:

- Flexible placement of network segments throughout the data center. It provides a solution to extend Layer 2 segments over the underlying shared network infrastructure so that physical location of a network segment becomes irrelevant.

- Higher scalability to address more Layer 2 segments. VLANs use a 12-bit VLAN ID to address Layer 2 segments, which limits scalability to only 4094 VLANs. VxLAN uses a 24-bit segment ID known as the VxLAN Network Identifier (VNID), which enables up to 16 million VxLAN segments to coexist in the same administrative domain.

- Layer 3 overlay topology, with better utilization of available network paths in the underlying infrastructure. VLAN-based networks use the Spanning Tree Protocol for loop prevention, which can end up leaving half of the network links unused by blocking redundant paths. In contrast, VxLAN packets are transferred through the underlying network based on their Layer 3 header and can take full advantage of Layer 3 routing, equal-cost multipath (ECMP) routing, and link aggregation protocols to use all available paths.
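The ID-space numbers above follow directly from the field widths, and a short Python sketch makes the arithmetic concrete. The header layout used here is the standard 8-byte VXLAN header from RFC 7348, which is an assumption for illustration: the ACI fabric itself uses an extended VxLAN header whose extra fields are not modeled. The helper names `pack_vxlan_header` and `unpack_vnid` are hypothetical.

```python
import struct

VLAN_ID_BITS = 12
VNID_BITS = 24

usable_vlans = 2 ** VLAN_ID_BITS - 2  # IDs 0 and 4095 are reserved
vxlan_segments = 2 ** VNID_BITS       # about 16 million segments

def pack_vxlan_header(vnid):
    """Pack a standard 8-byte VXLAN header: flags, 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vnid < 2 ** VNID_BITS:
        raise ValueError("VNID must fit in 24 bits")
    flags = 0x08  # "I" flag set: the VNI field is valid
    return struct.pack("!II", flags << 24, vnid << 8)

def unpack_vnid(header):
    """Recover the 24-bit VNI from a packed header."""
    _, second_word = struct.unpack("!II", header)
    return second_word >> 8

print(usable_vlans)    # 4094
print(vxlan_segments)  # 16777216
header = pack_vxlan_header(10000)
print(header.hex())    # 0800000000271000
print(unpack_vnid(header))  # 10000
```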

You can read more about VxLAN technology in “VXLAN Overview: Cisco Nexus 9000 Series Switches”.

Traffic Flow in ACI Fabric

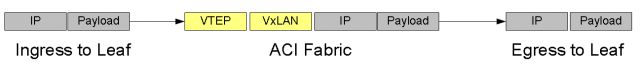

User traffic is encapsulated from the user space into VxLAN, and the VxLAN overlay provides Layer 2 adjacency when needed. This way we can emulate Layer 2 connectivity while gaining the scalability and flexibility of VxLAN.

When traffic comes into the infrastructure from the user space, it can arrive as untagged frames, on an 802.1Q trunk, or as VxLAN or NVGRE. We want to take any of this traffic and normalize it as it enters the ACI fabric. When traffic is received from a host at the Leaf, we translate the frames to VxLAN and transport them to the destination across the fabric. For instance, we can carry traffic from Hyper-V servers that use Microsoft NVGRE: we take the NVGRE frames, encapsulate them with VxLAN, and send them to their destination Leaf. We can do this between any VM hypervisor workload and physical devices, whether they are bare-metal servers or physical appliances providing Layer 3 to 7 services.

So the ACI fabric gives us the ability to completely normalize traffic coming in on one Leaf and send it to another (or to a port on the same Leaf). When the frames exit the destination Leaf, they are re-encapsulated into whatever format the destination network expects: untagged frames, 802.1Q trunk, VxLAN, or NVGRE. The ACI fabric performs the encapsulation, decapsulation, and re-encapsulation at line rate. The fabric not only provides Layer 3 routing for packets moving within the fabric, it also provides external routing to reach the Internet and Intranet routers.

- All traffic within the ACI fabric is encapsulated with an extended VxLAN header and carried between VTEPs (VxLAN Tunnel End Points).

- User space VLAN, VxLAN, and NVGRE tags are mapped at the Leaf ingress point to a fabric-internal VxLAN. Note that the fabric-internal VxLAN acts like a wrapper around whatever frame format comes in.

- Routing and forwarding decisions are made at the Spine level, often using MP-BGP.

- User space identities are localized to the Leaf or Leaf port, allowing re-use and/or translation if required.
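The normalization described above can be sketched as a pair of lookup tables on each Leaf. This is a simplified model under stated assumptions: the `Frame` and `Leaf` classes, the `"fabric-vxlan"` label, and all tag and VNID values are hypothetical, and a real Leaf does this in hardware with a richer policy model. The point is that the user-space tag only has meaning on the Leaf where it is configured, so the same VLAN ID can be reused or translated elsewhere.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Frame:
    encap: str           # "untagged", "vlan", "vxlan", "nvgre", or "fabric-vxlan"
    tag: Optional[int]   # VLAN ID, VNID, or NVGRE VSID; None when untagged
    payload: str

class Leaf:
    def __init__(self, name, tag_map):
        self.name = name
        # (encap, tag) -> fabric-internal VNID; this mapping is local to the leaf.
        self.to_fabric = dict(tag_map)
        # Reverse lookup used on egress; assumes the mapping is one-to-one.
        self.from_fabric = {vnid: key for key, vnid in self.to_fabric.items()}

    def ingress(self, frame):
        """Normalize: replace the user-space tag with the fabric-internal VNID."""
        vnid = self.to_fabric[(frame.encap, frame.tag)]
        return Frame("fabric-vxlan", vnid, frame.payload)

    def egress(self, fabric_frame):
        """Re-encapsulate into whatever format this leaf's attached network expects."""
        encap, tag = self.from_fabric[fabric_frame.tag]
        return Frame(encap, tag, fabric_frame.payload)

# VLAN 10 is used on both leaves but maps to different fabric segments.
leaf1 = Leaf("leaf1", {("vlan", 10): 9001, ("nvgre", 5000): 9002})
leaf2 = Leaf("leaf2", {("untagged", None): 9001, ("vlan", 10): 9002})

inside = leaf1.ingress(Frame("nvgre", 5000, "app data"))
outside = leaf2.egress(inside)
print(inside)   # NVGRE frame wrapped as fabric VNID 9002
print(outside)  # leaves the fabric on leaf2 as VLAN 10
```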

When we connect existing data center networks to ACI, the fabric accepts either ordinary subnets on a given VRF or a VLAN for a given external device. We then represent them inside the ACI fabric as external entities or groups, which can become part of the logical model we build in the Application Centric Infrastructure.

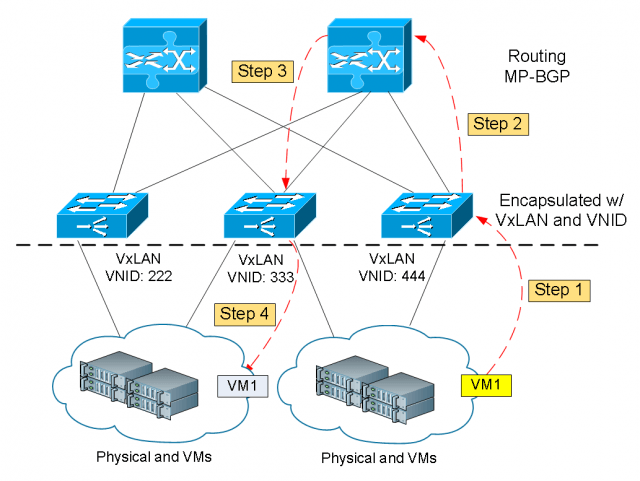

Location Independent

VxLAN not only eliminates the Spanning Tree Protocol, it also gives us location independence within the fabric. The IP address itself is intended to identify a device for forwarding purposes. Within the ACI fabric, we take a device IP and map it to a VxLAN ID or VNID, which helps us identify where the device is located at any given time. In other words, any virtual machine host is identified by its IP address within the server and by the VNID at the ACI Leaf. If this host migrates to a VM hypervisor at a different location within the ACI fabric, its VNID is replaced by the destination Leaf’s VNID and traffic is forwarded there. The ACI fabric knows that the VM with the same IP is the same host, simply relocated. This allows us to provide very robust forwarding to a device while still maintaining the flexibility of workload mobility. The result is a robust, extremely scalable ACI fabric that provides mobility within user space across the infrastructure space for any end point.

In the diagram above, let’s say we are migrating VM1 from the server farm on the right to the one on the left. The following steps take place:

- Step 1: Traffic for VM1 is sent to the Leaf where it is directly connected. The frames are normalized and encapsulated into VxLAN format.

- Step 2: VM1 is identified by its IP address within the server and the VxLAN Network Identifier (VNID) of the Leaf it currently sits on. The Leaf then forwards the packet to the Spine for the forwarding decision.

- Step 3: The Spine replaces the packet’s VNID with the destination Leaf’s VNID and sends it over.

- Step 4: The destination Leaf receives the packets, strips off the VxLAN wrapper, and forwards them to the new server farm. From the network’s perspective, VM1 is still the same host with the same IP address.
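The four steps can be modeled with a small lookup table keyed by IP address. This is a deliberately simplified sketch, not how the fabric is implemented: the `Spine` class, the `send` function, the `locator` field, the Leaf names, and the IP address are all hypothetical. It illustrates the key idea that the IP address stays fixed while the location associated with it changes after a migration.

```python
class Spine:
    """Holds a hypothetical endpoint table: IP address -> leaf currently hosting it."""

    def __init__(self):
        self.endpoint_location = {}

    def learn(self, ip, leaf_name):
        """Record (or update) where an endpoint currently lives."""
        self.endpoint_location[ip] = leaf_name

    def forward(self, packet):
        # Step 3: rewrite the locator to the leaf that currently hosts the destination.
        dest_leaf = self.endpoint_location[packet["dst_ip"]]
        return {**packet, "locator": dest_leaf}

def send(spine, ingress_leaf, dst_ip, payload):
    # Steps 1-2: the ingress leaf wraps the frame and tags it with its own locator.
    packet = {"dst_ip": dst_ip, "locator": ingress_leaf, "payload": payload}
    # Step 3: the spine makes the forwarding decision.
    packet = spine.forward(packet)
    # Step 4: the destination leaf strips the wrapper and delivers the payload.
    return packet["locator"], packet["payload"]

spine = Spine()
spine.learn("10.0.0.11", "leaf-right")  # VM1 starts in the right-hand server farm
before = send(spine, "leaf-border", "10.0.0.11", "hello")

spine.learn("10.0.0.11", "leaf-left")   # VM1 migrates; same IP, new location
after = send(spine, "leaf-border", "10.0.0.11", "hello")

print(before)  # ('leaf-right', 'hello')
print(after)   # ('leaf-left', 'hello')
```

The sender never changes the destination IP; only the table entry moves, which is what makes the workload location independent in this model.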

ACI Fabric Scalability

The scalability of the fabric is based on the Spine and Leaf design. With this design, we get linear scale in both performance and cost, which makes it a cost-effective approach as the network grows. A fabric can range from fewer than a hundred ports up to a hundred thousand 10G ports and a million end points.
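A rough back-of-the-envelope calculation shows why the growth is linear. The function `fabric_capacity` and every port count and link speed below are hypothetical values chosen for illustration, not figures for any specific Cisco switch, and the model assumes each Leaf uses exactly one uplink to every Spine.

```python
def fabric_capacity(spine_ports, num_spines, leaf_host_ports, uplink_gbps):
    """Estimate fabric size, assuming one uplink from every leaf to every spine."""
    max_leaves = spine_ports  # each leaf consumes one port on each spine
    return {
        "max_leaves": max_leaves,
        "max_host_ports": max_leaves * leaf_host_ports,
        "uplink_gbps_per_leaf": num_spines * uplink_gbps,
    }

two_spines = fabric_capacity(spine_ports=36, num_spines=2, leaf_host_ports=48, uplink_gbps=40)
four_spines = fabric_capacity(spine_ports=36, num_spines=4, leaf_host_ports=48, uplink_gbps=40)

print(two_spines)   # 36 leaves, 1728 host ports, 80 Gbps of uplink per leaf
print(four_spines)  # same leaf count, 160 Gbps of uplink per leaf
```

In this model, host ports grow with the number of Leaves and per-Leaf bandwidth grows with the number of Spines, each by simple multiplication.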

The post What is Cisco ACI Fabric appeared first on Speak Network Solutions, LLC.